“Intel actively collaborates with the leaders in the AI software ecosystem to deliver solutions that blend performance with simplicity. Meta Llama 3 represents the next big iteration in large language models for AI. As a major supplier of AI hardware and software, Intel is proud to work with Meta to take advantage of models such as Llama 3 that will enable the ecosystem to develop products for cutting-edge AI applications.”

–Wei Li, Intel vice president and general manager of AI Software Engineering

Why It Matters: Intel's Commitment to Ubiquitous AI

Intel is dedicated to integrating AI into every aspect of technology, investing heavily in software and the AI ecosystem to ensure its products stay at the forefront of innovation.

In data centers, Intel Gaudi accelerators and Intel Xeon processors with Intel® Advanced Matrix Extensions (Intel® AMX) acceleration provide versatile solutions to meet diverse and evolving demands.

Intel Core Ultra processors and Intel Arc graphics products support comprehensive software frameworks and tools, including PyTorch and Intel® Extension for PyTorch®, for local AI research and development.

The OpenVINO™ toolkit further aids in model development and inference, facilitating deployment across millions of devices.

Running Llama 3 on Intel: Initial Insights

Intel’s initial testing of Llama 3 models (8B and 70B parameters) uses open-source software, including PyTorch, DeepSpeed, the Intel Optimum Habana library, and Intel Extension for PyTorch, to apply the latest software optimizations. For detailed performance insights, visit the Intel Developer Blog.

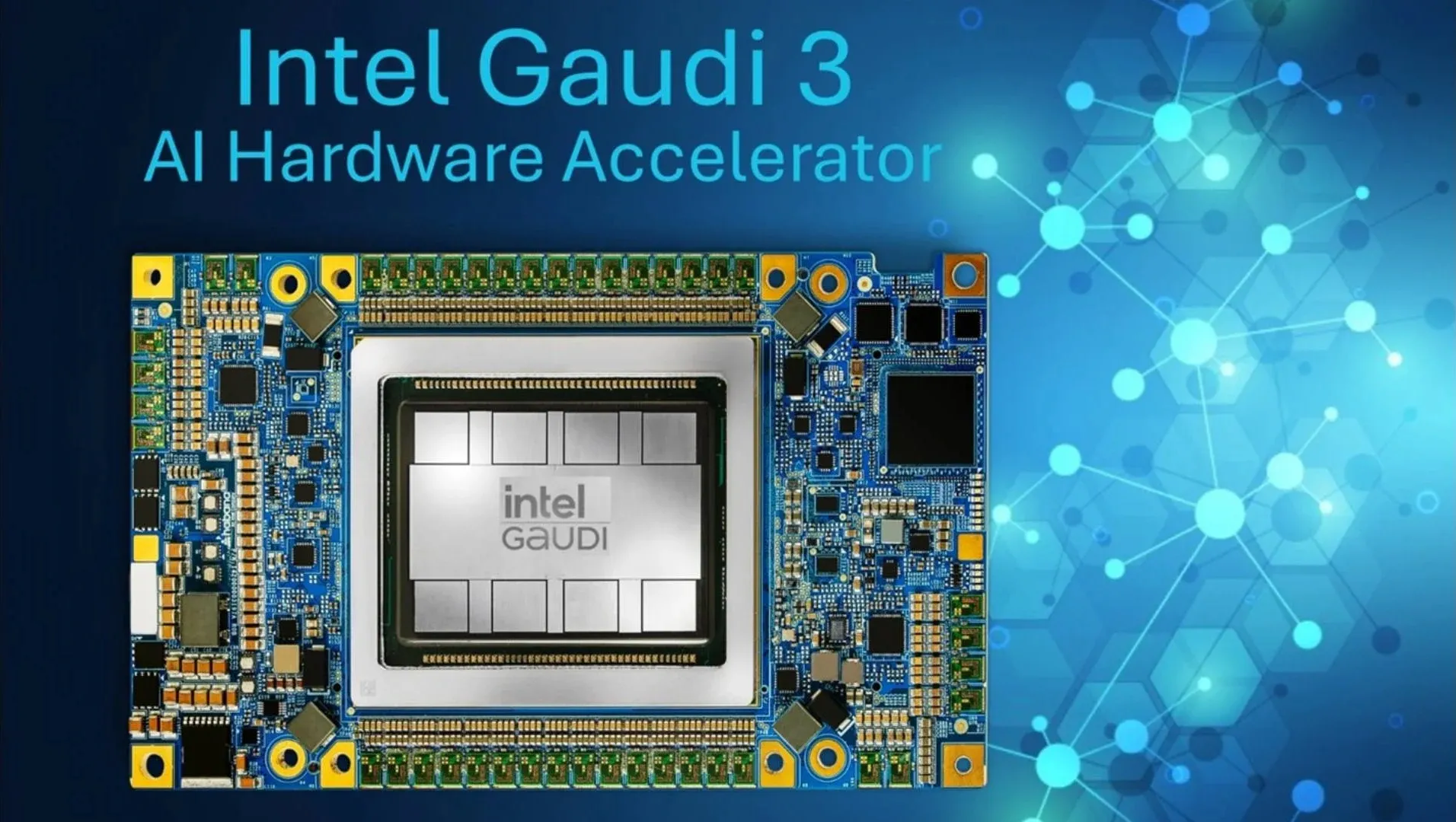

Optimized Performance with Intel Gaudi Accelerators

Intel® Gaudi® 2 accelerators, previously optimized for Llama 2 models (7B, 13B, and 70B parameters), now also support the new Llama 3 model with notable performance improvements in inference and fine-tuning.

The recently announced Intel® Gaudi® 3 accelerator extends this support, ensuring robust performance for the latest AI workloads.

Intel Xeon Processors for End-to-End AI Workloads

Intel Xeon processors are designed to handle demanding AI tasks, with significant investments made to optimize LLM results and minimize latency.

Intel® Xeon® 6 processors with Performance-cores (code-named Granite Rapids) show a 2x reduction in Llama 3 8B inference latency compared to 4th Gen Intel® Xeon® processors, and can run larger models such as Llama 3 70B in under 100 ms per generated token.
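To put the per-token figure in context, the following minimal Python sketch converts a per-token latency into single-stream throughput and total generation time. The function names are illustrative and not part of any Intel tool. The calculation assumes a constant latency for every generated token and ignores the time to process the prompt before the first token.

```python
def tokens_per_second(latency_ms_per_token: float) -> float:
    """Convert per-token latency (ms) into single-stream throughput."""
    if latency_ms_per_token <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms_per_token


def generation_time_s(num_tokens: int, latency_ms_per_token: float) -> float:
    """Time to generate num_tokens, assuming constant per-token latency."""
    return num_tokens * latency_ms_per_token / 1000.0


# 100 ms per token is the upper bound quoted for Llama 3 70B.
budget_ms = 100.0
print(tokens_per_second(budget_ms))       # 10.0 tokens per second
print(generation_time_s(256, budget_ms))  # 25.6 seconds for a 256-token reply
```

At the quoted 100 ms bound, a single stream produces at least 10 tokens per second under these assumptions.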

Exceptional Performance with Intel Core Ultra and Arc Graphics

Intel Core Ultra processors and Intel Arc graphics deliver outstanding performance for Llama 3. Initial tests reveal that Intel Core Ultra processors generate text faster than typical human reading speeds.
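The reading-speed comparison can be made concrete with a rough calculation. The sketch below is illustrative only: the reading speed of 250 words per minute and the ratio of 1.3 tokens per English word are assumptions chosen for the example, not figures from Intel's testing, and the function names are hypothetical.

```python
# Assumed values for illustration; neither comes from Intel's measurements.
ASSUMED_WORDS_PER_MINUTE = 250.0
ASSUMED_TOKENS_PER_WORD = 1.3


def reading_speed_tokens_per_s(words_per_minute: float = ASSUMED_WORDS_PER_MINUTE,
                               tokens_per_word: float = ASSUMED_TOKENS_PER_WORD) -> float:
    """Approximate reading speed expressed in tokens per second."""
    return words_per_minute * tokens_per_word / 60.0


def keeps_up_with_reader(latency_ms_per_token: float) -> bool:
    """True if generation at this latency is at least as fast as the assumed reader."""
    generated_tokens_per_s = 1000.0 / latency_ms_per_token
    return generated_tokens_per_s >= reading_speed_tokens_per_s()


reader = reading_speed_tokens_per_s()
print(f"Assumed reader: {reader:.1f} tokens/s")
print(f"Latency needed to keep up: {1000.0 / reader:.0f} ms per token or less")
```

Under these assumptions, a device needs to generate roughly five to six tokens per second to stay ahead of a reader.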

The Intel® Arc™ A770 GPU, equipped with Xe Matrix eXtensions (XMX) AI acceleration and 16GB of dedicated memory, provides exceptional performance for large language model (LLM) workloads.
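To see why memory capacity matters for LLM workloads, here is a back-of-the-envelope estimate of the memory needed for model weights alone at several numeric precisions. It is a sketch under stated assumptions: it uses the nominal 8 billion parameter count, decimal gigabytes, and hypothetical helper names, and it ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights only, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


NOMINAL_8B_PARAMS = 8e9  # nominal count; the exact figure differs slightly
GPU_MEMORY_GB = 16.0     # dedicated memory of the Arc A770 cited above

for dtype in BYTES_PER_PARAM:
    needed = weight_memory_gb(NOMINAL_8B_PARAMS, dtype)
    headroom = GPU_MEMORY_GB - needed
    print(f"{dtype}: {needed:.1f} GB for weights, {headroom:.1f} GB left over")
```

By this estimate, 16-bit weights for an 8B model fill the card's memory on their own, while 8-bit or 4-bit quantized weights leave room for the cache and activations.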